TikTok's Mental Health Risks: Alarming Findings from a Recent Experiment

Despite TikTok's claims that it offers more than 50 security, privacy, and protection settings for teenagers, concerns about its impact on mental health persist. An experiment with two fictional minor accounts found that TikTok's algorithm quickly exposed them to content that normalizes self-harm and suicide. Mental health experts warn that the platform is failing at a crucial task: keeping psychological distress from spiraling into an endless loop.

Experiment Details and Findings

The investigation used accounts for fictional minors named Mario and Laura, ages 13 and 15. Six mental health professionals evaluated the content accessible to these accounts and issued alarming conclusions about TikTok's potential risks to young users. Luis Fernando López-Martínez, a psychologist and professor at the Complutense University of Madrid, stated, “It doesn't automatically induce harmful behavior, but it raises the likelihood of harm for vulnerable individuals, impacting traditional protective factors like seeking adult support.”

TikTok is the most popular social network among Spanish youth, and a Qustodio report found that adolescents spend an average of 115 minutes a day on the app. Other studies indicate that one in five adolescents aged 12 to 18 spends more than two hours a day on the platform, a pattern associated with a reduced ability to set limits on app use.

European Commission's Scrutiny

The European Commission recently reached a preliminary finding that TikTok violates the Digital Services Act (DSA) because of its "addictive design." Features such as infinite scrolling, autoplay, and a personalized recommendation system may harm the physical and mental well-being of users, particularly minors. TikTok disputes these findings, but if they are confirmed, the company could face fines of up to 6% of its annual revenue.

Algorithm and User Experience

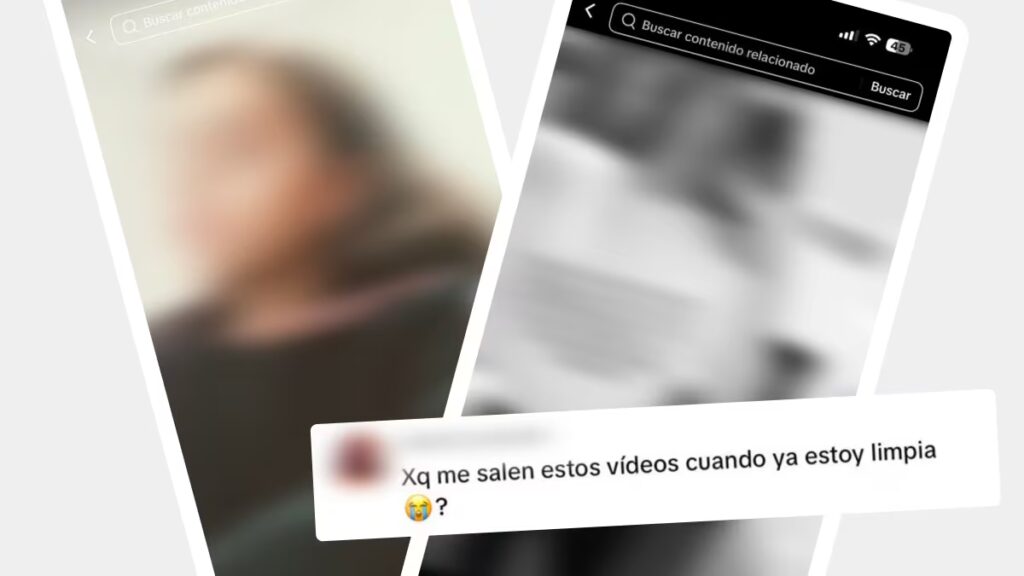

The experiment highlighted how swiftly TikTok's algorithm can lead users to harmful content. Within minutes of engaging with specific searches, the feed began recommending videos that trivialize suicide and promote self-harm. José González, a psychologist specializing in grief and suicide prevention, pointed out that frequent exposure to such content can encourage imitation and normalize harmful behaviors among impressionable youth.

Legal Actions Against TikTok

Families in France and the United States have filed lawsuits against TikTok, claiming that its recommendations contributed to the suicides of minors. The platform is also facing a broader lawsuit in the U.S. regarding its effects on minors' mental health. Research indicates that while social media can aid in suicide prevention, there is a concerning link between heavy use and increased risk of suicidal ideation.

Impact of Algorithmic Design

Mental health experts are concerned that TikTok's algorithm reinforces whatever interests a user shows and can therefore serve content that is not age-appropriate. Aurora Alés Portillo, a specialist in mental health nursing, warns that teenagers often seek validation from strangers, which can reinforce harmful behaviors, rather than turning to trusted adults for support.

Statistics on Suicide Among Youth

Suicide ranks as the third leading cause of death among individuals aged 15 to 29, according to the World Health Organization. Data indicates that while boys have higher overall rates, the suicide rate among girls aged 15 to 19 has reached a four-decade high.

Teen Behavior on TikTok

Many teenagers turn to TikTok for help with mental health problems but often encounter harmful content instead of specialized support. Alarmingly, the platform hosts trends related to self-harm and suicide despite its stated policies against such content. Searches for sensitive terms do trigger messages pointing users to crisis support, including a hotline.

TikTok claims to have numerous security settings activated by default for teen accounts and a process for deleting underage accounts. Still, experts question the effectiveness of these age-verification measures. Reports of “secret accounts” and users identifying as under 13 suggest these measures may not adequately prevent access.

Potential Solutions and Recommendations

Experts advise gradual and supervised introduction of social media for minors, while emphasizing the importance of open communication about harmful content. They recommend prioritizing supervision in communal spaces, establishing clear limits, and utilizing parental control tools. Notably, experts caution that punitive measures can lead to increased secrecy among teenagers.

Legal Accountability for Platforms

As the debate over regulation intensifies, Spanish Prime Minister Pedro Sánchez has announced plans for legislation that would hold social media executives criminally liable for failing to remove illegal content. Experts argue that TikTok must take responsibility for the content it promotes, particularly to vulnerable groups.

As concerns about TikTok's effect on mental health persist, experts stress that the platform must demonstrate its commitment to user safety and reduce the risks to its younger audience.