The Rise of Artificial Intelligence in Content Creation

Artificial intelligence (AI) is becoming increasingly pervasive in our digital landscape. A significant portion of online content is now generated by machines, which makes it harder to tell authentic information apart from the noise produced by bots. Aaron Harris, global chief technology officer (CTO) at Sage, warns that this proliferation of automated content makes it more difficult to establish what is true.

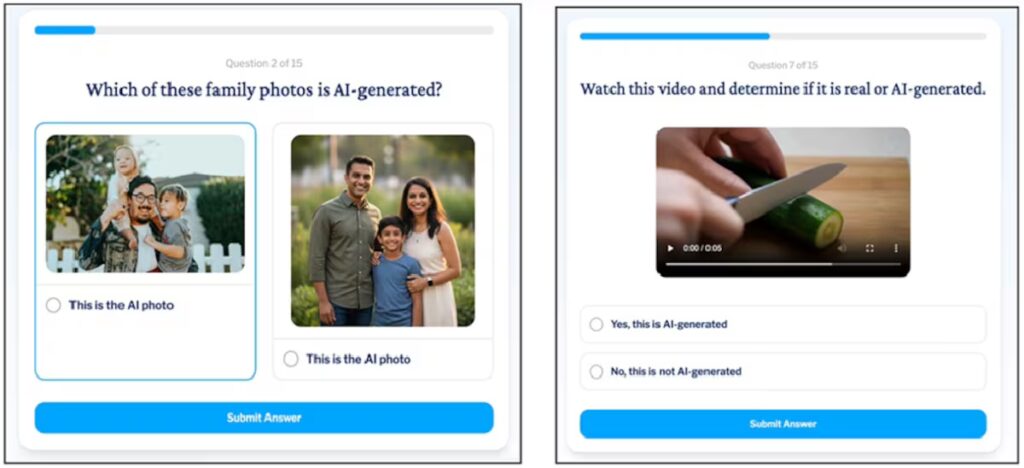

Detecting Misinformation: A Study

The Care Side, an Australian home care organization, conducted a study of more than 3,000 participants to examine how well people detect misinformation, and found significant differences by age. Participants under 29 correctly identified eight out of ten examples of false content, such as deepfakes, while those aged 65 and over performed only slightly better than chance. This difference points to a generational gap in the ability to tell fake content from real content.

The Threat of AI-Driven Fraud

The potential for manipulation and misinformation extends beyond content creation, as cybercriminals increasingly use AI to commit fraud. Josep Albors, head of Research and Awareness at ESET Spain, notes that scammers now build convincing campaigns around AI-generated images and videos of public figures, which makes fraudulent content harder for people to spot.

Hervé Lambert, customer service operations manager at Panda Security, recounts receiving a call in which a voice imitating one of his managers asked him to carry out questionable actions. When he verified the request, he discovered it was a scam.

Understanding Generational Vulnerability

Lambert stresses the importance of digital education for all ages, arguing that AI manipulation and fraud are not confined to any one generation. He notes that greater digital exposure can sharpen detection skills, citing his own result on The Care Side quiz, in which he outperformed younger participants despite his age.

Marti DeLiema, PhD in Gerontology and Deputy Director of Education at the Center for Healthy Aging and Innovation at the University of Minnesota, reinforces this perspective, stating that AI is transforming the fraud landscape for everyone.

Lambert attributes this vulnerability to a combination of a lack of knowledge, awareness, and training in recognizing fraudulent scenarios. He points to how threats have evolved over the past decade and emphasizes the need for greater vigilance.

Building Trust in Communication

To guard against AI-driven scams, Lambert has set up a secret code phrase with his children so they can verify communications that seem suspicious, particularly while they are traveling. He also warns that relying solely on tools designed to detect AI-generated content will not eliminate the risk, and insists that education based on real-world scenarios is necessary to help people recognize potential threats.

Detecting and Avoiding AI-Driven Fraud

According to Albors, there are several strategies individuals can employ to detect false content:

- Text: Be cautious of generic greetings, urgent deadlines, and unsolicited requests for personal information. Always reread messages for errors or unnatural phrasing.

- Image: Look for unusual symmetries, malformations, or mismatched shadows and reflections in images. AI-generated images often exhibit these characteristics.

- Audio: AI-generated voice messages may contain robotic rhythms, unnatural pauses, or emotional tones that don't align with the content. Listen for imprecise responses that deviate from normal conversation flow.

- Video: The same principles apply to videos; watch for inconsistencies in facial gestures, lip synchronization, and unnatural expressions.

By applying these guidelines, individuals can better protect themselves from misinformation and AI-enabled fraud.
